Example of an IAB Implementation: End-of-Unit Assessment

In this section, we provide an example of how an educator might use one of the IABs to improve teaching and learning in her classroom. Included in this example are screenshots from the Smarter Reporting System that illustrate the different views available to educators to analyze the data and interpret it within their local context. Results will be analyzed at the group level, individual student level, and item level. At each level, highlights of appropriate use and cautions will be provided.

Group-Level Analysis

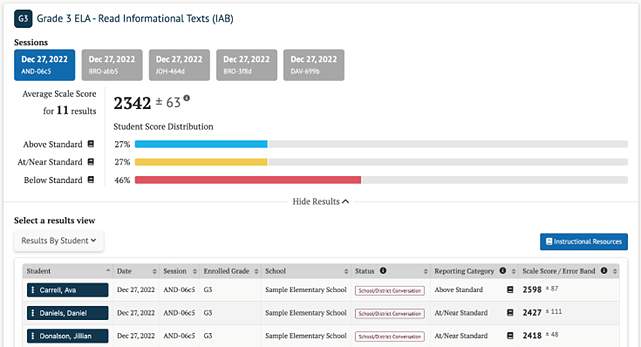

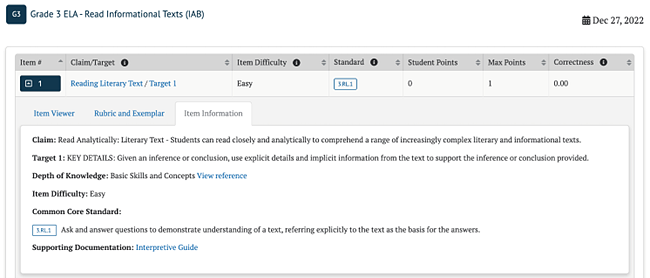

As shown in figure 1 below, Ms. Garcia’s classes had an average scale score of 2342 on the Grade 3 ELA – Read Informational Texts IAB. She can also see the error band (Standard Error of the Mean) of +/- 63 points. This means that if a test of parallel design were given to these students on another day without further instruction, their average scale score would likely fall between 2279 (2342 minus 63 points) and 2403 (2342 plus 63 points).

Figure 1. Group-Level View of IAB Results

Ms. Garcia can see from the Student Score Distribution section that 27% of her students scored within the Above Standard reporting category, 27% of the students scored within the At/Near Standard reporting category, and 46% scored within the Below Standard category.

From the group results page, Ms. Garcia can access links to supports through the “Instructional Resources” button. The link leads to the Interim Connections Playlist for that specific IAB; each IAB has an associated Tools for Teachers Connections Playlist. Connections Playlists are developed by teachers for teachers. Each playlist shows a Performance Progression that identifies the attributes of Below/Near/Above performance and links to Tools for Teachers lessons that support the skills covered in the associated interim assessment. In addition to the Smarter Balanced Connections Playlists, districts and schools have the option to upload links to local district or school resources within the Reporting System.

By selecting the “Instructional Resources” button, Ms. Garcia can access resources for all reporting categories. Ms. Garcia can find:

- instruction designed to enrich and expand students’ skills; and

- instruction based on student needs.

See the Tools for Teachers section for more information.

Group Item-Level Analysis

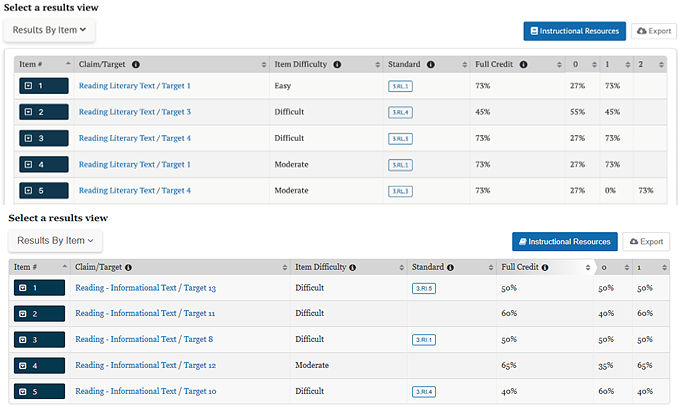

For each item in the IAB, Ms. Garcia can see the claim, target, item difficulty, the relevant standard assessed, and the proportion of students who received full credit, as well as the proportion of students at each score point.

For example, as shown in figure 2, item #2 is noted as “Difficult”. Ms. Garcia sees that 45% of her students received full credit on Item #2. Continuing in the same row, she can also see that 55% of her students did not receive any points and 45% received the maximum of one point. This information indicates a need for additional support.

Figure 2. Item-Level View of IAB Results: Group Scores

Ms. Garcia can also sort on the Full Credit column to quickly identify test items that students performed well on and items where students struggled.

Student-Level Analysis

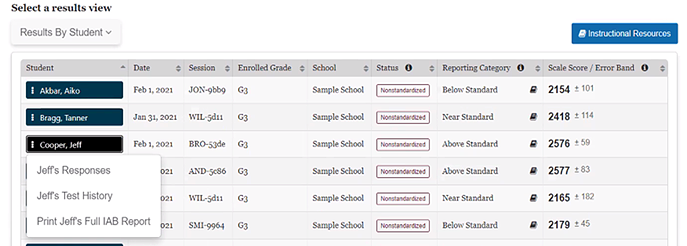

To learn more about her students’ individual needs, Ms. Garcia can view “Results by Student” as shown in figure 3 below. The “Reporting Category” column is sortable, so Ms. Garcia can easily identify the students who performed Above, Near, or Below Standard. She can use that information during small-group time in her classroom.

Using the test results for students, combined with her knowledge of student performance on classroom assignments, homework, and other observations, Ms. Garcia makes inferences about her students’ ability to read and comprehend informational text. She is confident that students who scored in the Above Standard category have mastered the skills and knowledge taught in the classroom and are in no need of additional support on that content. For those students, she uses an idea from the Interim Connections Playlist (ICP) to offer an extra challenge along with some additional independent reading time.

Next, Ms. Garcia considers how to support the students who scored in the Below Standard category, suspecting that they might need additional instruction. Ms. Garcia remembers that the IAB is only one measure, and it should always be used in combination with other information about her students. She knows that a student who has never had difficulty comprehending informational text may have been having a bad day when the interim was administered. With that caveat in mind, Ms. Garcia reviews the reporting categories and chooses an instructional resource from the ICP to support the students who scored Below Standard in a collaborative learning group.

Figure 3. Results by Student View of IAB Results

As shown in figure 3, Ms. Garcia can select an individual student from the group list (by selecting the blue box with the student’s name) to examine the student’s performance on items within the IAB. When an individual student is selected, Ms. Garcia can select the option to view the student’s responses, and a screen showing each item in the IAB is displayed, as shown in figure 4 below.

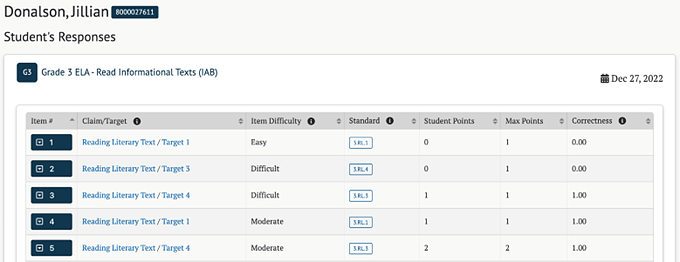

Figure 4. Individual Student Item-Level View of IAB Information

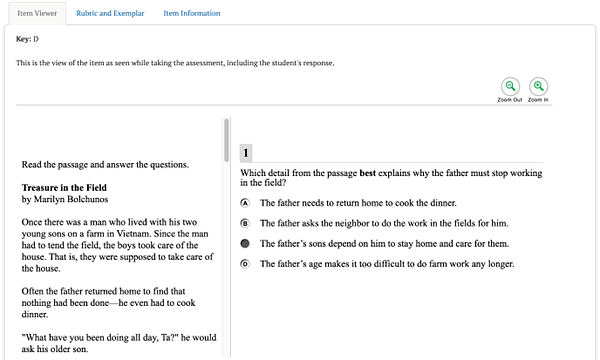

Ms. Garcia selects item number 1, and the following three tabs appear: Item Viewer, Rubric and Exemplar, and Item Information, as shown in figure 5 below.

Figure 5. Item-Level Tabs

By examining student responses in the Item Viewer tab, Ms. Garcia can identify patterns in student responses that might reveal common misconceptions or misunderstandings. If several students chose the same incorrect response, for example, Ms. Garcia can isolate areas to revisit with her class.

As shown in figure 6 below, the Rubric and Exemplar tab shows the exemplar (i.e., correct response), any other possible correct responses to the item, and a rubric that defines the point values associated with specific responses. For multiple-choice questions, the key or correct response is provided.

Figure 6. Rubric and Exemplar Tab

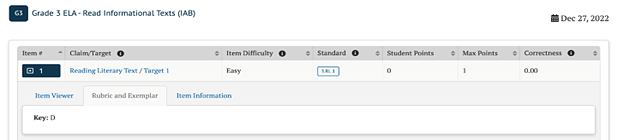

As shown in figure 7 below, the Item Information tab describes the claim, assessment target, domain, and standard that the item assesses. This tab also provides the Depth of Knowledge, the item difficulty, and links to other supporting documentation.

Figure 7. Item Information Tab

Claims, Targets, Domain, and Standards

Claims and targets are a way of classifying test content. The claim is the major topic area. For example, in English language arts, reading is a claim. Within each claim, there are targets that describe the knowledge and skills that the test measures. Each target may encompass one or more standards from the CCSS. Within the Reading claim, for example, one of the targets is concerned with finding the central idea in a text. Domains are large groups of related standards in the Mathematics CCSS (e.g., Geometry, Statistics and Probability, Ratios and Proportional Relationships). More information about the claims, targets, and standards can be found on the Development and Design page of the Smarter Balanced website.

Domains and Standards for CAST Interim Assessments

The California Next Generation Science Standards (CA NGSS) are organized into three major categories, called science domains, representing Earth and Space Sciences (ESS), Life Sciences (LS), and Physical Sciences (PS). Each domain includes a set of learning standards, called performance expectations (PEs), that describe what a student should know and be able to do. The CA NGSS include a fourth subdomain titled Engineering, Technology, and the Applications of Science (ETS). The ETS subdomain has its own PEs as well. ETS PEs are assessed within the context of the three other science domains. PEs represent an integration of science content knowledge, applicable skills, and broad ideas applicable to all scientific disciplines. More information on the CA NGSS and its PEs can be found on the NGSS for California Public Schools, K-12 page of the CDE’s website.

Domains and Task Types for ELPAC Interim Assessments

The English Language Proficiency Assessments for California (ELPAC) Interim Assessments are designed to support teaching and learning throughout the academic year for EL students. These assessments focus on four domains: Listening, Speaking, Reading, and Writing. Each domain assesses specific language skills, such as comprehension, verbal expression, reading proficiency, and writing abilities. The task types for each domain are aligned with the 2012 California English Language Development (ELD) Standards. The ELPAC Interim Assessments cover grade levels from kindergarten through grade twelve, providing valuable insights into students’ progress in acquiring English language proficiency (ELP).

Depth of Knowledge

Depth of Knowledge (DOK) levels, developed by Webb (1997), reflect the complexity of the cognitive process demanded by curricular activities and assessment tasks (table 1). Higher DOK levels are associated with activities and tasks that have high cognitive demands. The DOK level describes the kind of thinking a task requires, not whether the task is difficult in and of itself.

| DOK Level | Title of Level |
|---|---|
| 1 | Recall |
| 2 | Skills and Concepts |
| 3 | Strategic Thinking |
| 4 | Extended Thinking |

Depth of Knowledge for CAST Interim Assessments

Depth of knowledge (DOK) levels, developed by Webb (1997), reflect the complexity of the cognitive process demanded by curricular activities and assessment tasks. There are four DOK levels (table 2), ranging from level one, which involves the lowest cognitive demand and thought complexity, to level four, which involves the highest cognitive demand and thought complexity. The DOK level describes the kind of thinking a task requires and is not necessarily an indicator of difficulty.

| DOK Level | Title of Level |
|---|---|
| 1 | Recall and Reproduction |
| 2 | Working with Skills and Concepts |
| 3 | Short-Term Strategic Thinking |
| 4 | Extended Strategic Thinking |

Performance-Level Descriptors for ELPAC Interim Assessments

The performance level descriptors (PLDs) are based on the English Language Development (ELD) Standards proficiency levels, which describe how English learner (EL) students demonstrate use of language at different stages of development. The PLDs in table 3 indicate the skill degree and variation of the items, ranging from Beginning to Develop to Well Developed. Because of the nature of language and the English Language Proficiency Assessments for California (ELPAC) task types, some items measure more than one PLD. Note that the values in the PLD Key column are the values assigned to each item within the ELPAC Interim Assessment in CERS, indicating the various combinations of PLDs assessed by the item. The Description column describes the PLDs covered by that item.

| PLD Key | Description |
|---|---|
| 1 | Targets the Beginning to Develop (PLD 1) skills in the assessed domain |
| 2 | Targets the Somewhat Developed (PLD 2) skills in the assessed domain |
| 3 | Targets the Moderately Developed (PLD 3) skills in the assessed domain |
| 4 | Targets the Well Developed (PLD 4) skills in the assessed domain |
| 5 | Targets the Beginning to Develop to Somewhat Developed (PLDs 1 and 2) skills in the assessed domain |
| 6 | Targets the Beginning to Develop to Moderately Developed (PLDs 1 through 3) skills in the assessed domain |
| 7 | Targets the Beginning to Develop to Well Developed (PLDs 1 through 4) skills in the assessed domain |
| 8 | Targets the Somewhat Developed to Moderately Developed (PLDs 2 and 3) skills in the assessed domain |
| 9 | Targets the Somewhat Developed to Well Developed (PLDs 2 through 4) skills in the assessed domain |
| 10 | Targets the Moderately Developed to Well Developed (PLDs 3 and 4) skills in the assessed domain |

Item Difficulty

Each Smarter Balanced test item is assigned a difficulty level based on the proportion of students in the field-test sample who responded to that item correctly. The students who responded to the item are referred to as the reference population. The reference population determines the difficulty level of a test item. (Note: The reference population for an item consists of all the students who took the test the year the item was field-tested. Depending on when the item was field tested, the reference population may refer to students who took the spring 2014 Field Test or a subsequent summative assessment that included embedded field-tested items.)

Test items are classified as easy, moderate, or difficult based on the average proportion of correct responses of the reference population, also referred to as the average proportion-correct score (table 4). The average proportion-correct score can range from 0.00 (no correct answers, meaning the item is difficult) to 1.00 (all correct answers, meaning the item is easy).

| Difficulty Category | Range of Average Proportion Correct (p-value) Score (minimum – maximum) |
|---|---|
| Easy | 0.67 – 1.00 |
| Moderate | 0.34 – 0.66 |
| Difficult | 0.00 – 0.33 |

For items worth more than 1 point, the average proportion correct score is the item’s average score among students in the reference population divided by the maximum possible score on the item. For example, if the average score for a 2-point item is 1, its average proportion correct score is 1 divided by 2, or 0.50. In this example, that test item would be rated as moderate on the item difficulty scale.

Easy items are answered correctly by at least 67% of the students in the reference population.

Moderate items are answered correctly by 34-66% of the reference population.

Difficult items are answered correctly by 33% or fewer of the reference population.
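The classification rules above can be expressed as a small Python sketch. The function names are hypothetical, chosen for this illustration. The table lists cut points to two decimal places, so the sketch assumes the proportion-correct score is rounded to two decimals before it is compared with the cut points; the source does not state how values between the listed ranges (for example, 0.665) are handled.

```python
def proportion_correct(average_score: float, max_score: int) -> float:
    """Average item score in the reference population divided by the maximum score."""
    return average_score / max_score


def difficulty_category(p_value: float) -> str:
    """Map an average proportion-correct score to Easy, Moderate, or Difficult."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0.00 and 1.00")
    p = round(p_value, 2)  # assumption: compare at two-decimal precision
    if p >= 0.67:
        return "Easy"
    if p >= 0.34:
        return "Moderate"
    return "Difficult"


# The 2-point item from the text: an average score of 1 gives 1 / 2 = 0.50.
print(difficulty_category(proportion_correct(1, 2)))
```

For the 2-point item in the example, the sketch returns Moderate, matching the rating given in the text.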

As previously shown in figure 7, item #1 is aligned to Standard 3.RI.5 (Use text features and search tools (e.g., key words, sidebars, hyperlinks) to locate information relevant to a given topic efficiently) and assesses the Reading claim, Target 13 (TEXT STRUCTURES/FEATURES: Relate knowledge of text structures or text features (e.g., graphics, bold text, headings) to obtain, interpret, or explain information). This information tells Ms. Garcia what concepts and skills the item assesses.

Ms. Garcia can also see from this tab that Item #1 is classified as difficult. Ms. Garcia can include item difficulty in her inferences about student performance because item classification provides her with additional context when reviewing test results and considering instructional implications.

Student scores on more difficult items should be treated differently from the scores on less difficult items. For example, if half of the students get an item wrong, Ms. Garcia should avoid making generalized inferences about student needs. Instead, Ms. Garcia can account for the item difficulty when drawing conclusions from test results to determine what students know and can do. If the item is rated difficult, Ms. Garcia’s conclusions about her students may differ from conclusions based on an item rated easy. If half of the students answer an easy item incorrectly, she may decide to re-teach the concepts addressed in that item. On the other hand, if half of her students got a difficult item incorrect, she may choose to address that result by encouraging additional practice on this type of item.

Item Difficulty for CAST Interim Assessments

Each California Science Test (CAST) interim assessment item is assigned a difficulty level based on the proportion of students in the field test sample who responded to that item correctly. The students who responded to the item are referred to as the reference population. The reference population determines the difficulty level of a test item.

Test items are classified as easy, moderate, or difficult on the basis of the average proportion of correct responses of the reference population, also referred to as the average proportion-correct score (table 5). The average proportion-correct score (p-value) can range from 0.00 (no correct answers, meaning the item is difficult) to 1.00 (all correct answers, meaning the item is easy).

| Difficulty Category | Range of Average Proportion Correct (p-value) Score |
|---|---|
| Easy | 0.67–1.00 |
| Moderate | 0.34–0.66 |
| Difficult | 0.00–0.33 |

Item Difficulty for ELPAC Interim Assessments

Item-level results provided in the California Educator Reporting System (CERS) contain information about a group’s performance and each student’s performance on each item on the English Language Proficiency Assessments for California (ELPAC) Interim Assessment. For each item, information is provided for the group being evaluated.

Test items are classified as easy, moderate, or difficult on the basis of the average proportion of correct responses of the reference population, also referred to as the average proportion-correct score. The average proportion-correct score (p-value) can range from 0.00 (no correct answers, meaning the item is difficult) to 1.00 (all correct answers, meaning the item is easy). These difficulty categories indicate how difficult an item is relative to the Summative ELPAC item pool for each grade level or grade span. For each domain, difficulty categories were created by ordering the items in the item pool from easiest to most difficult. All of the items were then assigned a difficulty category based on the descriptions shown in table 6.

| Difficulty Category | Description |
|---|---|
| Easy | These items have a p-value higher than 75% of items in the bank. |
| Moderate | These items have a p-value lower than the easy items and higher than the difficult items. |
| Difficult | These items have a p-value lower than 75% of items in the bank. |
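Unlike the fixed cut points used for Smarter Balanced and CAST items, the ELPAC categories in the table above are relative to the item pool. The Python sketch below is one possible reading of those descriptions, not the procedure actually used for the ELPAC. The function name, the item identifiers, the p-values, and the handling of tied p-values are all assumptions made for illustration.

```python
def categorize_by_pool_rank(p_values: dict) -> dict:
    """Assign Easy/Moderate/Difficult from each item's standing in the pool."""
    n = len(p_values)
    categories = {}
    for item_id, p in p_values.items():
        # Share of pool items with a strictly lower / strictly higher p-value.
        share_lower = sum(1 for q in p_values.values() if q < p) / n
        share_higher = sum(1 for q in p_values.values() if q > p) / n
        if share_lower >= 0.75:
            categories[item_id] = "Easy"        # p-value higher than 75% of items
        elif share_higher >= 0.75:
            categories[item_id] = "Difficult"   # p-value lower than 75% of items
        else:
            categories[item_id] = "Moderate"
    return categories


# Hypothetical pool of eight items for one domain (not real ELPAC data).
pool = {"i1": 0.10, "i2": 0.20, "i3": 0.30, "i4": 0.40,
        "i5": 0.50, "i6": 0.60, "i7": 0.70, "i8": 0.80}
print(categorize_by_pool_rank(pool))
```

Because the categories depend on the pool, the same p-value could fall into different categories in different domains or grade spans.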

Key and Distractor Analysis

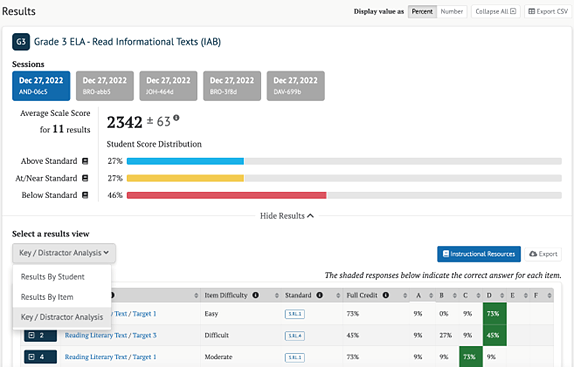

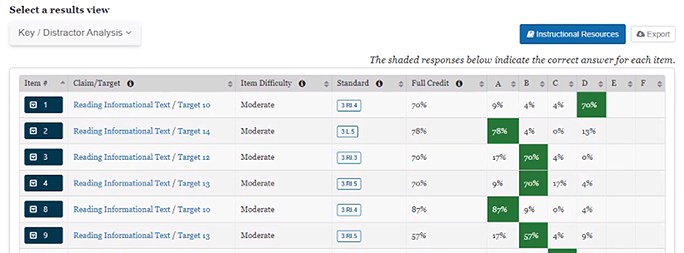

For selected response items, a teacher can see whether a large number of students selected a particular incorrect response, which may signal a common misconception. This report is available by selecting Key/Distractor Analysis from the dropdown in the “Select a results view” as shown in figure 8 below.

Figure 8. Select to View Key/Distractor Analysis

As shown in figure 9 below, the Key and Distractor Analysis view displays information for multiple-choice and multi-select items. The teacher can see the claim, target, item difficulty, and related standard(s) for each item, the percentage of students who earned full credit for each item, and the percentage of students who selected each answer option. (For multi-select items that have more than one correct answer, these percentages may not add up to 100 percent.) The teacher can sort the list by the percentage of students who earned full credit to see those items on which students had the greatest difficulty and then determine whether there were incorrect answers that many students selected. (The correct answers are shaded.)

Figure 9. Key and Distractor Analysis View
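The percentages in this view can be reproduced from raw responses with a short tally. The Python sketch below assumes single-answer multiple-choice items; the function names and the response data are hypothetical and are not taken from the Reporting System or from figure 9.

```python
from collections import Counter
from typing import Optional, Tuple


def option_percentages(responses: list) -> dict:
    """Percentage of students who selected each answer option."""
    total = len(responses)
    if total == 0:
        return {}
    counts = Counter(responses)
    return {option: round(100 * n / total, 1) for option, n in sorted(counts.items())}


def most_common_distractor(responses: list, key: str) -> Optional[Tuple[str, float]]:
    """Return the most frequently chosen incorrect option and its percentage."""
    wrong = Counter(r for r in responses if r != key)
    if not wrong:
        return None
    option, n = wrong.most_common(1)[0]
    return option, round(100 * n / len(responses), 1)


# Hypothetical class of ten students; the key (correct answer) is "B".
responses = ["B"] * 6 + ["A"] * 3 + ["C"]
print(option_percentages(responses))
print(most_common_distractor(responses, key="B"))
```

For multi-select items, each student response would first need to be split into its selected options, which is why those percentages may not add up to 100 percent.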

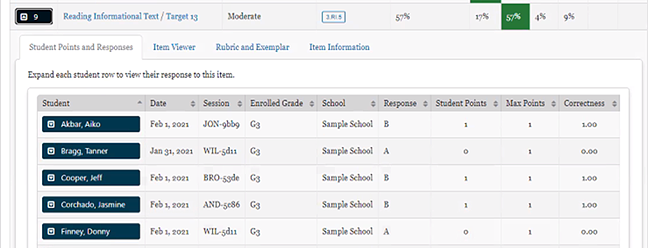

Ms. Garcia identifies Item 9 as one on which 17% of the students selected the same incorrect answer, A. To learn more about this item, the teacher can select the item number and see four tabs: Student Scores and Responses, Item Viewer, Rubric and Exemplar, and Item Information, as shown in figure 10 below. From the Student Scores and Responses tab, the teacher can sort on the Response column to see which students incorrectly selected option A. By selecting the Item Viewer tab, Ms. Garcia can see all the response options and, using other information about the students based on classroom discussion and assignments, begin to form hypotheses about why those students may have chosen the incorrect response option. She may decide to post that item and have the students discuss their reasoning aloud.

Figure 10. Key and Distractor Analysis Item Details Tabs

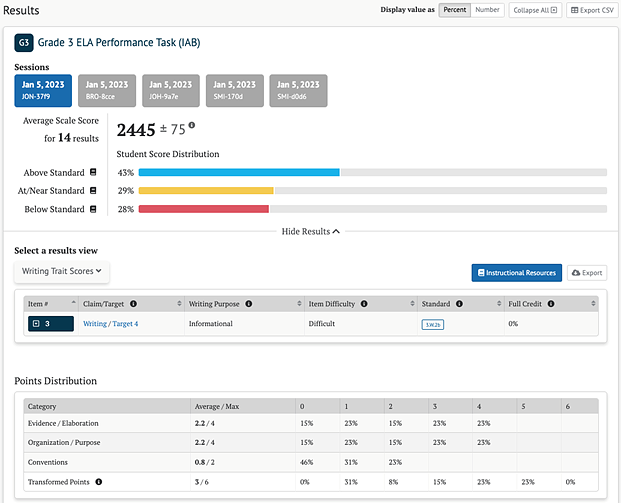

Writing Trait Score Report

Each Performance Task on the ELA Interim Comprehensive Assessment (ICA) and selected ELA IABs includes a full write or essay question. For these tests, a Writing Trait Score is provided, as shown in figure 11 below, that allows teachers to analyze the strengths and weaknesses of student writing based on student performance on the essay question.

Figure 11. Group Report on the Essay Question

This Performance Task report provides the information found on other group summary reports (average scale score and error band, student score distribution and item information). In addition, it indicates the writing purpose of the essay question. The purpose may be argumentative, explanatory, informational, narrative, or opinion depending on the grade level of the assessment.

The report provides the average points earned by the group of students and maximum number of points for each writing trait. The three writing traits describe the following proficiencies in the writing process.

- Organization/Purpose: Organizing ideas consistent with purpose and audience

- Evidence/Elaboration: Providing supporting evidence, details, and elaboration consistent with focus/thesis/claim, source text or texts, purpose and audience

- Conventions: Applying the conventions of standard written English; editing for grammar usage and mechanics to clarify the message

There is a maximum of four points for organization/purpose, four points for evidence/elaboration, and two points maximum for conventions.

The report also displays the Transformed Points value, which is calculated by adding the Conventions score to the average of the Organization/Purpose and Evidence/Elaboration scores. These two values (the Conventions score and the averaged score) represent the two dimensions that are used to compute the student’s overall scale score and the Claim 2 – Writing reporting category for the ELA ICA.

A student’s score is computed as follows:

Organization/purpose: 4 points earned

Evidence/elaboration: 1 point earned

Conventions: 2 points earned

Average = (4 + 1)/2 = 2.5, which is rounded up to 3 points; 3 + 2 = 5 Transformed Points
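The same computation can be written as a short Python sketch. The function name is an assumption made for this example. The sketch also assumes that every average ending in .5 is rounded up, as in the worked example; Python's built-in `round` would turn 2.5 into 2, so the rounding is done explicitly.

```python
import math


def transformed_points(organization_purpose: int,
                       evidence_elaboration: int,
                       conventions: int) -> int:
    """Add the Conventions score to the rounded average of the other two traits."""
    average = (organization_purpose + evidence_elaboration) / 2
    rounded_average = math.floor(average + 0.5)  # round half up: 2.5 becomes 3
    return rounded_average + conventions


# The student from the worked example: 4, 1, and 2 points.
print(transformed_points(4, 1, 2))
```

With the maximum trait scores of 4, 4, and 2, this sketch yields at most 6 Transformed Points.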

The report also provides the percentage distribution of students by the number of points they earned for each writing trait and the percentage of students who earned each possible number of Transformed Points.

Training guides for hand scoring are available in the Interim Assessment Hand Scoring System. The guides include the rubrics and annotated scored student responses that are used to determine student scores.

The Performance Task Writing Rubrics are also available in the links below:

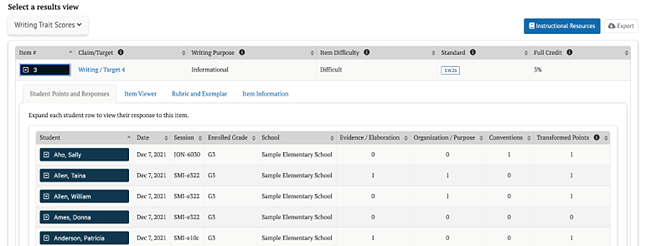

As shown in figure 12 below, Ms. Garcia can view the writing trait scores for individual students by selecting the standard in the blue box for item 3. This displays a report on individual student performance by writing trait and Transformed Points earned. The teacher can sort by Transformed Points to quickly identify students who performed well and those who need additional support. The Student Scores and Responses tab allows the teacher to read each student’s essay after selecting the blue box with the student’s name. The Item Viewer displays the essay question as it appeared on the test. The Rubric and Exemplar tab provides the writing rubrics, and the Item Information tab provides information about the claim, target, standard, item difficulty, and Depth of Knowledge.

Figure 12. Individual Student Report on the Essay Question

As Ms. Garcia reviews these results, she bears in mind all the same caveats about weighing student scores in the context of other evidence she has collected on her students, factoring in the difficulty of the test item and manner of test administration and recognizing that no test or single test question should be used as the sole indicator of student performance. Ms. Garcia considers the report and the rubric along with other writing assignments students have turned in that year. She plans additional support for writing in class and shares practice ideas with her students and their families as well.