Guidelines for Appropriate Use of Test Results

Many variables influence test results, and it is important that educators understand the following guidelines when analyzing assessment results to inform educational decisions.

Test Results Are Not Perfect Measures of Student Performance

All assessments include measurement error; no assessment is perfectly reliable. An error band is reported with a student’s test score as an indicator of the score’s reliability. The system calculates statistically how much better or worse the student could be expected to perform if the student took the assessment multiple times. Because performance could increase or decrease, the error band is shown on the report as a “±” followed by a number.

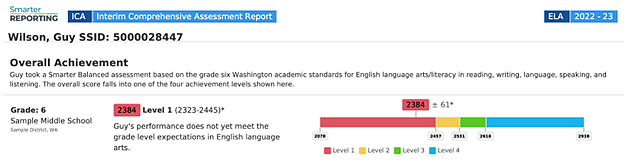

For example, as shown in figure 1, a grade six student takes the ELA Interim Comprehensive Assessment and receives a score of 2384 with an error band of ±61 points. This means that if the student took an assessment of similar difficulty again without receiving further instruction, whether by answering a different sample of test questions or by taking the same assessment on a different day, the student’s score would likely fall between 2323 (2384 minus 61) and 2445 (2384 plus 61).

Figure 1. Student’s Scale Score and Error Band
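The arithmetic behind the error band example can be sketched in a few lines of Python. This is only an illustration of the subtraction and addition described above; the function name `error_band_range` is hypothetical and is not part of any reporting system.

```python
def error_band_range(scale_score: int, error_band: int) -> tuple[int, int]:
    """Return the (low, high) score range implied by a scale score and its error band.

    Hypothetical helper for illustration only: it applies the "score minus band"
    and "score plus band" arithmetic from the grade six example.
    """
    if error_band < 0:
        raise ValueError("error_band must be non-negative")
    return scale_score - error_band, scale_score + error_band


# The grade six example: a score of 2384 with an error band of 61 points.
low, high = error_band_range(2384, 61)
print(f"Likely score range: {low} to {high}")  # Likely score range: 2323 to 2445
```

A narrower error band yields a narrower range, which signals a more reliable score.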

Measurement error in testing may result from several factors, such as the sample of questions included on the assessment, a student’s mental or emotional state during testing, or other conditions under which the student took the assessment. For example, the following might affect a student’s test performance:

- Student factors—whether the student was tired, hungry, or under stress

- Classroom factors—noise or temperature

- Technical issues—such as those with the student testing device

In addition, any items that require hand scoring create additional variability due to interpretive differences and human error.

Use the Entire Assessment in Combination with Other Indicators

Items in an interim assessment vary in format, content, target skill, and difficulty level. While it may be possible to make some inferences about what students know and can do based on their performance on a single test item, their performance on the entire assessment is a better indicator of their knowledge and skills.

All test results include some degree of error. Therefore, it is critical to use results from an assessment in a balanced manner, in combination with other information about student learning. Such information can include student work on classroom assignments, quizzes, observations, and other forms of evidence.

Educators may use test results as one part of an “academic wellness check” for a student. The test results, when analyzed alongside additional information about the student, can strengthen conclusions about where the student is doing well and where the student might benefit from additional instruction and support.

Validity of Results Depends on Appropriate Interpretation and Use

The interim assessments were designed to be used by educators to evaluate student performance against grade-level standards. When used as designed, results from the interim assessments can provide useful information to help educators improve teaching and learning for their students. However, any inferences made from the test results may not be valid if the assessment is used for purposes for which it was not designed and validated.

Manner of Administration Impacts the Use of Results

Teachers may use the interim assessments in several ways to gain information about what their students know and can do. The test administrator or test examiner must determine whether the assessment will be administered in a standardized or nonstandardized manner. Nonstandardized is the default setting. The manner of administration is displayed in the California Educator Reporting System with the student’s results.

When combined with other forms of evidence, results from standardized administration can be reasonably used to gauge student knowledge and growth over time after a period of instruction because those results represent individual student knowledge. Standardized administration of the interim assessments can be used both as an assessment of learning and an assessment for learning.

Nonstandardized administration of the interim assessments is done primarily for learning. Results from a nonstandardized administration should be used with caution when evaluating an individual student. Individual scores may be produced, but if a student works with other students, those scores do not reflect that student’s individual ability. However, nonstandardized administrations may yield information that cannot be collected during a standardized administration, such as hearing a student’s thought process as the student discusses a problem aloud. The goal of a nonstandardized administration is to learn where students are succeeding and where they might need more support during instruction.